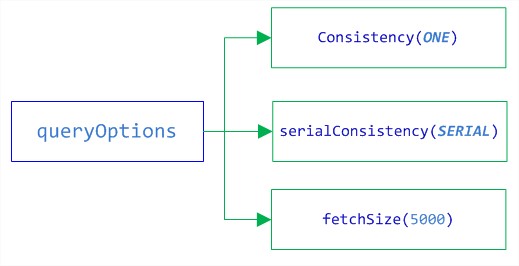

QueryOption:

FetchSize:

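FetchSize is set at the statement level and controls the page size used when retrieving a Result Set. For example, with a fetch size of 1000 and a result of 2500 rows:

`List<Row> all = execute.all();` needs 3 queries to return the full result. The value should therefore be neither too low nor too high. If it is too low, reading the full result takes about (total rows / page size) queries. If it is too high, each query returns too many rows at once.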
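The arithmetic behind this trade-off can be sketched in plain Java. `FetchSizeMath` and `pagesNeeded` are hypothetical names used only for illustration and are not part of the driver; the result is an estimate of the minimum number of round trips.

```java
final class FetchSizeMath {
    // Ceiling division: the minimum number of pages needed to hold totalRows rows.
    static long pagesNeeded(long totalRows, int fetchSize) {
        if (totalRows < 0 || fetchSize <= 0) {
            throw new IllegalArgumentException("totalRows must be >= 0 and fetchSize must be > 0");
        }
        return (totalRows + fetchSize - 1) / fetchSize;
    }

    public static void main(String[] args) {
        System.out.println(pagesNeeded(2500, 1000)); // 3, the case described above
        System.out.println(pagesNeeded(2500, 100));  // 25, many small round trips
        System.out.println(pagesNeeded(2500, 5000)); // 1, everything in one large page
    }
}
```

A small fetch size multiplies round trips, while a large one moves the cost into the size of each response.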

Consistency:

[The following is excerpted from online sources]

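- Consistency

  - Tunable consistency

    Provides partition tolerance. On a per-operation basis, the user decides how many nodes must receive a DML operation or respond to a SELECT.

  - Linearizable consistency (for background on consistency in distributed systems, see the article "Consistency Models in Distributed Systems")

    A serial isolation level for lightweight transactions (compare-and-set). Use it when tunable consistency is not enough, for example to perform uninterrupted sequential operations, or when an operation must produce the same result whether or not it runs concurrently with others. Cassandra 2.0 implements linearizable consistency with the Paxos consensus protocol, which resembles 2-phase commit. To support it, Cassandra introduced the SERIAL consistency level and, in CQL, lightweight transactions with an IF clause. See: http://www.datastax.com/dev/blog/lightweight-transactions-in-cassandra-2-0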

Lightweight transactions

Introduced in Cassandra 2.0 to complement tunable consistency.

- INSERT INTO emp(empid, deptid, address, first_name, last_name) VALUES (102, 14, 'luoyang', 'Jane Doe', 'li') IF NOT EXISTS;

- UPDATE emp SET address = 'luoyang' WHERE empid = 103 AND deptid = 16 IF last_name = 'zhang';
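The compare-and-set behaviour of these two statements can be pictured with an in-memory analogy. This is only a sketch of the semantics on a single JVM map, not how Cassandra or Paxos implements them; `LightweightTxAnalogy`, its methods, and the `"empid:deptid"` key format are hypothetical.

```java
final class LightweightTxAnalogy {
    // Hypothetical in-memory "table": key is "empid:deptid", value is {address, last_name}.
    private final java.util.Map<String, String[]> rows =
            new java.util.concurrent.ConcurrentHashMap<>();

    // Like INSERT ... IF NOT EXISTS: applied only when no row exists for the key.
    boolean insertIfNotExists(String key, String address, String lastName) {
        return rows.putIfAbsent(key, new String[] {address, lastName}) == null;
    }

    // Like UPDATE ... SET address = ? IF last_name = ?: check and write happen as one atomic step.
    boolean updateAddressIfLastName(String key, String newAddress, String expectedLastName) {
        boolean[] applied = {false};
        rows.computeIfPresent(key, (k, row) -> {
            if (!row[1].equals(expectedLastName)) {
                return row; // condition failed, leave the row unchanged
            }
            applied[0] = true;
            return new String[] {newAddress, row[1]};
        });
        return applied[0];
    }

    String addressOf(String key) {
        String[] row = rows.get(key);
        return row == null ? null : row[0];
    }

    public static void main(String[] args) {
        LightweightTxAnalogy t = new LightweightTxAnalogy();
        System.out.println(t.insertIfNotExists("102:14", "luoyang", "li"));        // true
        System.out.println(t.insertIfNotExists("102:14", "beijing", "li"));        // false
        System.out.println(t.updateAddressIfLastName("102:14", "xian", "zhang"));  // false
        System.out.println(t.updateAddressIfLastName("102:14", "xian", "li"));     // true
    }
}
```

In both cases the caller learns whether the change was applied, which is the same information a conditional CQL statement reports back.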

API definition:

Sets the serial consistency level for the query. The serial consistency level is only used by conditional updates (so INSERT, UPDATE and DELETE with an IF condition). For those, the serial consistency level defines the consistency level of the serial phase (or “paxos” phase) while the normal consistency level defines the consistency for the “learn” phase, i.e. what type of reads will be guaranteed to see the update right away. For instance, if a conditional write has a regular consistency of QUORUM (and is successful), then a QUORUM read is guaranteed to see that write. But if the regular consistency of that write is ANY, then only a read with a consistency of SERIAL is guaranteed to see it (even a read with consistency ALL is not guaranteed to be enough).

The serial consistency can only be one of ConsistencyLevel.SERIAL or ConsistencyLevel.LOCAL_SERIAL. While ConsistencyLevel.SERIAL guarantees full linearizability (with other SERIAL updates), ConsistencyLevel.LOCAL_SERIAL only guarantees it in the local data center.

The serial consistency level is ignored for any query that is not a conditional update (serial reads should use the regular consistency level for instance).

- Parameters:

  - `serialConsistency`: the serial consistency level to set.

- Returns:

  - this `Statement` object.

- Throws:

  - `IllegalArgumentException`: if `serialConsistency` is not one of `ConsistencyLevel.SERIAL` or `ConsistencyLevel.LOCAL_SERIAL`.

From the API definition, we can summarize three key points:

(1) The serial consistency level is only used by conditional updates (so INSERT, UPDATE and DELETE with an IF condition).

(2) ConsistencyLevel.SERIAL guarantees full linearizability (with other SERIAL updates), ConsistencyLevel.LOCAL_SERIAL only guarantees it in the local data center.

(3) The serial consistency level is ignored for any query that is not a conditional update (serial reads should use the regular consistency level for instance).
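The argument check described in the API definition can be sketched as follows. The `Level` enum here is a stand-in that only mirrors the level names, and `SerialConsistencyCheck` and `requireSerial` are hypothetical; this is not the driver's own code.

```java
final class SerialConsistencyCheck {
    // Stand-in enum mirroring the driver's level names; not the driver's ConsistencyLevel class.
    enum Level {
        ANY, ONE, TWO, THREE, QUORUM, ALL, LOCAL_QUORUM, EACH_QUORUM, SERIAL, LOCAL_SERIAL, LOCAL_ONE
    }

    // Only SERIAL and LOCAL_SERIAL are valid serial consistency levels.
    static boolean isSerial(Level level) {
        return level == Level.SERIAL || level == Level.LOCAL_SERIAL;
    }

    // Mirrors the documented contract: reject anything that is not a serial level.
    static Level requireSerial(Level level) {
        if (!isSerial(level)) {
            throw new IllegalArgumentException(
                    "Serial consistency must be SERIAL or LOCAL_SERIAL, got " + level);
        }
        return level;
    }

    public static void main(String[] args) {
        System.out.println(requireSerial(Level.LOCAL_SERIAL)); // LOCAL_SERIAL
        try {
            requireSerial(Level.QUORUM);
        } catch (IllegalArgumentException e) {
            System.out.println(e.getMessage());
        }
    }
}
```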

All levels defined in the Datastax driver:

ANY (0), ONE (1), TWO (2), THREE (3), QUORUM (4), ALL (5), LOCAL_QUORUM (6), EACH_QUORUM (7), SERIAL (8), LOCAL_SERIAL (9), LOCAL_ONE (10);

Datastax doc:

About write consistency

The consistency level determines the number of replicas on which the write must succeed before returning an acknowledgment to the client application.

| Level | Description | Usage |
|---|---|---|
| ALL | A write must be written to the commit log and memtable on all replica nodes in the cluster for that partition. | Provides the highest consistency and the lowest availability of any other level. |
| EACH_QUORUM | Strong consistency. A write must be written to the commit log and memtable on a quorum of replica nodes in all data centers. | Used in multiple data center clusters to strictly maintain consistency at the same level in each data center. For example, choose this level if you want a read to fail when a data center is down and the QUORUM cannot be reached on that data center. |
| QUORUM | A write must be written to the commit log and memtable on a quorum of replica nodes. | Provides strong consistency if you can tolerate some level of failure. |
| LOCAL_QUORUM | Strong consistency. A write must be written to the commit log and memtable on a quorum of replica nodes in the same data center as the coordinator node. Avoids latency of inter-data center communication. | Used in multiple data center clusters with a rack-aware replica placement strategy (NetworkTopologyStrategy) and a properly configured snitch. Fails when using SimpleStrategy. Use to maintain consistency locally (within the single data center). |
| ONE | A write must be written to the commit log and memtable of at least one replica node. | Satisfies the needs of most users because consistency requirements are not stringent. |
| TWO | A write must be written to the commit log and memtable of at least two replica nodes. | Similar to ONE. |
| THREE | A write must be written to the commit log and memtable of at least three replica nodes. | Similar to TWO. |
| LOCAL_ONE | A write must be sent to, and successfully acknowledged by, at least one replica node in the local data center. | In multiple data center clusters, a consistency level of ONE is often desirable, but cross-DC traffic is not. LOCAL_ONE accomplishes this. For security and quality reasons, you can use this consistency level in an offline datacenter to prevent automatic connection to online nodes in other data centers if an offline node goes down. |
| ANY | A write must be written to at least one node. If all replica nodes for the given partition key are down, the write can still succeed after a hinted handoff has been written. If all replica nodes are down at write time, an ANY write is not readable until the replica nodes for that partition have recovered. | Provides low latency and a guarantee that a write never fails. Delivers the lowest consistency and highest availability. |
| SERIAL | Achieves linearizable consistency for lightweight transactions by preventing unconditional updates. | You cannot configure this level as a normal consistency level, configured at the driver level using the consistency level field. You configure this level using the serial consistency field as part of the native protocol operation. See failure scenarios. |
| LOCAL_SERIAL | Same as SERIAL but confined to the data center. A write must be written conditionally to the commit log and memtable on a quorum of replica nodes in the same data center. | Same as SERIAL. Used for disaster recovery. See failure scenarios. |

Even at low consistency levels, the write is still sent to all replicas for the written key, even replicas in other data centers. The consistency level just determines how many replicas are required to respond that they received the write.

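- ANY: the write succeeds on at least one replica. Even if all replicas are down, the write still succeeds once a hinted handoff has been written. The write becomes readable after the replicas recover.

- ONE: the write must be written to the commit log and memtable of at least one replica.

- TWO: at least two replicas.

- THREE: at least three replicas.

- QUORUM: at least (replication_factor / 2) + 1 replicas.

- LOCAL_QUORUM: at least (replication_factor / 2) + 1 replicas, in the same data center as the coordinator.

- EACH_QUORUM: at least (replication_factor / 2) + 1 replicas in every data center.

- ALL: all replicas.

- SERIAL: at least (replication_factor / 2) + 1 replicas; used to achieve linearizable consistency for lightweight transactions.

Note that the write is still sent to all relevant replicas. The consistency level only determines how many replicas must acknowledge it.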

SERIAL and LOCAL_SERIAL write failure scenarios

If one of three nodes is down, the Paxos commit fails under the following conditions:

- CQL query-configured consistency level of ALL

- Driver-configured serial consistency level of SERIAL

- Replication factor of 3

A WriteTimeout with a WriteType of CAS occurs and further reads do not see the write. If the node goes down in the middle of the operation instead of before the operation started, the write is committed, the value is written to the live nodes, and a WriteTimeout with a WriteType of SIMPLE occurs.

Under the same conditions, if two of the nodes are down at the beginning of the operation, the Paxos commit fails and nothing is committed. If the two nodes go down after the Paxos proposal is accepted, the write is committed to the remaining live nodes and written there, but a WriteTimeout with WriteType SIMPLE is returned.

About read consistency

The consistency level specifies how many replicas must respond to a read request before returning data to the client application.

Cassandra checks the specified number of replicas for data to satisfy the read request.

| Level | Description | Usage |
|---|---|---|
| ALL | Returns the record after all replicas have responded. The read operation will fail if a replica does not respond. | Provides the highest consistency of all levels and the lowest availability of all levels. |
| EACH_QUORUM | Returns the record once a quorum of replicas in each data center of the cluster has responded. | Same as LOCAL_QUORUM |
| QUORUM | Returns the record after a quorum of replicas has responded from any data center. | Ensures strong consistency if you can tolerate some level of failure. |
| LOCAL_QUORUM | Returns the record after a quorum of replicas in the current data center as the coordinator node has reported. Avoids latency of inter-data center communication. | Used in multiple data center clusters with a rack-aware replica placement strategy (NetworkTopologyStrategy) and a properly configured snitch. Fails when using SimpleStrategy. |
| ONE | Returns a response from the closest replica, as determined by the snitch. By default, a read repair runs in the background to make the other replicas consistent. | Provides the highest availability of all the levels if you can tolerate a comparatively high probability of stale data being read. The replicas contacted for reads may not always have the most recent write. |
| TWO | Returns the most recent data from two of the closest replicas. | Similar to ONE. |
| THREE | Returns the most recent data from three of the closest replicas. | Similar to TWO. |
| LOCAL_ONE | Returns a response from the closest replica in the local data center. | Same usage as described in the table about write consistency levels. |
| SERIAL | Allows reading the current (and possibly uncommitted) state of data without proposing a new addition or update. If a SERIAL read finds an uncommitted transaction in progress, it will commit the transaction as part of the read. Similar to QUORUM. | To read the latest value of a column after a user has invoked a lightweight transaction to write to the column, use SERIAL. Cassandra then checks the inflight lightweight transaction for updates and, if found, returns the latest data. |
| LOCAL_SERIAL | Same as SERIAL, but confined to the data center. Similar to LOCAL_QUORUM. | Used to achieve linearizable consistency for lightweight transactions. |

About the QUORUM level

The QUORUM level writes to the number of nodes that make up a quorum. A quorum is calculated, and then rounded down to a whole number, as follows:

(sum_of_replication_factors / 2) + 1

The sum of all the replication_factor settings for each data center is the sum_of_replication_factors.

For example, in a single data center cluster using a replication factor of 3, a quorum is 2 nodes, so the cluster can tolerate 1 replica node down. Using a replication factor of 6, a quorum is 4, so the cluster can tolerate 2 replica nodes down. In a two data center cluster where each data center has a replication factor of 3, a quorum is 4 nodes, so the cluster can tolerate 2 replica nodes down. In a five data center cluster where each data center has a replication factor of 3, a quorum is 8 nodes.
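These figures can be reproduced with a few lines of Java. This is a minimal sketch of the quoted formula; `QuorumMath`, `quorum`, and `tolerableDown` are hypothetical helper names, with one replication factor passed per data center.

```java
final class QuorumMath {
    // Sum of the replication_factor settings of every data center.
    static int sumOfReplicationFactors(int... replicationFactors) {
        if (replicationFactors.length == 0) {
            throw new IllegalArgumentException("at least one data center is required");
        }
        int sum = 0;
        for (int rf : replicationFactors) {
            if (rf <= 0) {
                throw new IllegalArgumentException("replication factor must be positive");
            }
            sum += rf;
        }
        return sum;
    }

    // (sum_of_replication_factors / 2) + 1, where integer division rounds down.
    static int quorum(int... replicationFactors) {
        return sumOfReplicationFactors(replicationFactors) / 2 + 1;
    }

    // Replicas that may be down while a quorum can still respond.
    static int tolerableDown(int... replicationFactors) {
        return sumOfReplicationFactors(replicationFactors) - quorum(replicationFactors);
    }

    public static void main(String[] args) {
        System.out.println(quorum(3) + " / " + tolerableDown(3));       // 2 / 1
        System.out.println(quorum(6) + " / " + tolerableDown(6));       // 4 / 2
        System.out.println(quorum(3, 3) + " / " + tolerableDown(3, 3)); // 4 / 2
        System.out.println(quorum(3, 3, 3, 3, 3));                      // 8
    }
}
```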

If consistency is top priority, you can ensure that a read always reflects the most recent write by using the following formula:

(nodes_written + nodes_read) > replication_factor

For example, if your application is using the QUORUM consistency level for both write and read operations and you are using a replication factor of 3, then this ensures that 2 nodes are always written and 2 nodes are always read. The combination of nodes written and read (4) being greater than the replication factor (3) ensures strong read consistency.
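The inequality is easy to check for a given pair of levels. This is a small sketch under the assumption of a single data center; `ReadWriteOverlap` and `readsSeeLatestWrite` are hypothetical names.

```java
final class ReadWriteOverlap {
    // True when (nodes_written + nodes_read) > replication_factor,
    // meaning every read set overlaps every write set in at least one replica.
    static boolean readsSeeLatestWrite(int nodesWritten, int nodesRead, int replicationFactor) {
        if (nodesWritten < 1 || nodesRead < 1 || replicationFactor < 1) {
            throw new IllegalArgumentException("all arguments must be at least 1");
        }
        return nodesWritten + nodesRead > replicationFactor;
    }

    public static void main(String[] args) {
        int rf = 3;
        int quorum = rf / 2 + 1; // 2 replicas for a replication factor of 3
        System.out.println(readsSeeLatestWrite(quorum, quorum, rf)); // true:  QUORUM write, QUORUM read
        System.out.println(readsSeeLatestWrite(1, 1, rf));           // false: ONE write, ONE read
        System.out.println(readsSeeLatestWrite(1, rf, rf));          // true:  ONE write, ALL read
    }
}
```

The ONE/ONE case shows why low levels on both sides can return stale data: the replica that is read may not be the one that acknowledged the write.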

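Summary:

1. The serial consistency level defaults to SERIAL and only applies to operations with an IF condition. If no IF is used, it can be ignored.

2. ALL consistency does not mean every node in the cluster, but every replica node for the data. See this explanation from another source: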

In Cassandra, writing with a consistency level of ALL means that the data will be written to all N nodes responsible for the particular piece of data, where N is the replication factor, before the client gets a response. In a standard Cassandra configuration, the write goes into an in-memory table and an in-memory log for each node. The log is periodically batch flushed to disk; there is also an option to flush per commit, but this option severely impacts performance. Subsequent reads from any node are strongly consistent and get the most recent update.

3. Serial consistency only applies to INSERT, UPDATE and DELETE. For example, the following Update uses onlyIf():

    QueryBuilder
        .update(keyspace, table)
        .with(QueryBuilder.set(key, value))
        .onlyIf(condition)
        .where(QueryBuilder.eq(id.toString(), value))